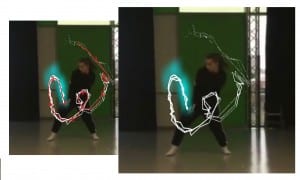

Before I’d had a chance to book out expensive equipment, I built a makeshift rig to experiment with motion tracking the dancers. It consisted of a front-view and a side-view camera recording the stage, and red ribbon tied to the dancer’s leg and arm, which would be tracked in After Effects. In the footage, I’ve isolated the red ribbon to make it easier to motion track. Because there are two video feeds, there are two sets of X and Y values, which can be combined to simulate X, Y, and Z values in After Effects. Though slightly inaccurate, this manages to capture the three-dimensional movement of the band. These values can, of course, also be applied to other parameters of the animation.
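The idea of merging the two camera feeds can be sketched in a few lines of Python. This is only a rough illustration, not the setup I actually used in After Effects: it assumes the two cameras are at right angles to each other, are synced frame for frame, and film at the same scale, and it ignores perspective entirely. The function name `combine_views` and the sample numbers are made up for the example.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def combine_views(front: List[Point2D], side: List[Point2D]) -> List[Point3D]:
    """Merge per-frame 2D tracks from a front and a side camera into 3D points.

    Assumes (hypothetically) perpendicular, synced cameras at the same scale.
    """
    if len(front) != len(side):
        raise ValueError("both tracks need the same number of frames")

    points: List[Point3D] = []
    for (fx, fy), (sx, sy) in zip(front, side):
        x = fx               # left/right, seen by the front camera
        y = (fy + sy) / 2.0  # height is seen by both cameras, so average it
        z = sx               # depth, seen as left/right by the side camera
        points.append((x, y, z))
    return points


# Two frames of made-up tracking data for one ribbon.
front_track = [(10.0, 20.0), (12.0, 24.0)]
side_track = [(5.0, 22.0), (6.0, 24.0)]
print(combine_views(front_track, side_track))
```

Averaging the two height readings is one simple way to smooth out the disagreement between the cameras, which is where some of the inaccuracy mentioned above comes from.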

A better way of capturing and importing this movement is with a Kinect. The video below explains how a couple built a tool for creating 2.5D skeletal meshes in After Effects from Kinect input. If we captured the dancer this way, we would have a simple means of generating animation linked to dance movements.

I showed them the prototypes and examples of animation that I had made prior to this meeting. These concept animations were made to give Zoe and the dancer clear examples of what I could create. They proceeded to produce sequences of movement inspired by the animations, while Shane and I projected them onto a screen. The director gave feedback, and this allowed us to begin experimenting with animations that we would later test.

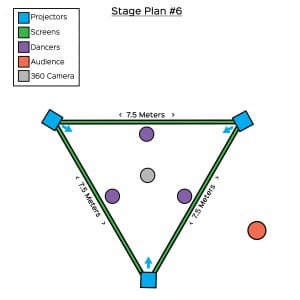

The stage we have decided to work with is a triangular in-the-round, with three screens surrounding it. Shane started to formulate and experiment with small-scale prototypes of this. The different models gave us ideas about the space required for front or back projection, and the type of projectors needed to achieve this at large scale. They also gave a basis for modelling the projection mapping: how the animations would work in accordance with the structure of the stage, in practical and artistic terms.

Digitally adapting the performance is something Shane and I discussed. As the stage is in-the-round, the audience would ordinarily watch from the outside in. However, introducing a 360-degree camera placed above the centre of the dancers, but lower than the screens, would allow the audience to watch from the inside out. This would ideally be viewed through a VR headset, placing the audience in the centre of the action. I think this could be used to broaden Zoe’s theme of escapism. Shane suggested live-streaming the performance on Facebook, which has recently introduced the ability to view and stream 360-degree video. Wider audiences could watch the performance live on Facebook and look around it. This could be especially accessible, yet effective, through a smartphone or tablet.