Today we aimed to build animations that respond more closely to the dancer’s movement. In After Effects, using video of Zoe performing a sequence of repetitive movements, I used motion tracking, along with a path, to create a sprite that moves in accordance with the dance. At first, the path follows her hand movements. Then, as she throws the sprite, its particles fly off and follow their own path.

Because After Effects expressions are written in JavaScript, it is possible to pick-whip these values and apply them directly to the properties of other effects and presets, such as size, opacity, and much more. Recently, I’ve been experimenting with creating animation from the amplitude of the audio in music. The videos below are examples of this and are explained in more detail further down. Motion tracking can be pushed further in the same way: the motion tracker in After Effects provides the X and Y positions as numerical values, which means they could drive a more abstract animation that still follows the dancer’s movement. Projected onto a stage, this would become a choreographed performance of the digital deconstruction and reconstruction of the human form alongside its true organic counterpart, each responding to the other.
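To make the “abstract but still in accordance with the dancer” idea concrete, here is a minimal sketch in plain JavaScript, the language After Effects expressions are written in. It is an illustration under stated assumptions, not the setup used in this project: `trackedPositions` is hypothetical sample data standing in for a track point’s X and Y values, and the `linear` helper reimplements the clamping behaviour of the expression language’s `linear()` function so the code runs outside After Effects. Rather than copying the position directly, it turns the hand’s speed into a scale value.

```javascript
// Hypothetical tracked hand positions in pixels, one [x, y] pair per frame.
// They stand in for the X and Y values a track point produces in After Effects.
const trackedPositions = [
  [960, 540],
  [970, 530],
  [1000, 500],
  [1060, 440],
  [1070, 430],
];

// Clamped linear remap, mirroring linear(t, tMin, tMax, value1, value2)
// from the After Effects expression language.
function linear(t, tMin, tMax, value1, value2) {
  if (t <= tMin) return value1;
  if (t >= tMax) return value2;
  return value1 + ((t - tMin) / (tMax - tMin)) * (value2 - value1);
}

// Speed in pixels per frame: distance between consecutive tracked points.
// The first frame has no predecessor, so its speed is taken as 0.
function speeds(positions) {
  const result = [0];
  for (let i = 1; i < positions.length; i++) {
    const [x0, y0] = positions[i - 1];
    const [x1, y1] = positions[i];
    result.push(Math.hypot(x1 - x0, y1 - y0));
  }
  return result;
}

// Still hand -> 50% scale; hand moving 80 px/frame or faster -> 200% scale.
const scales = speeds(trackedPositions).map((s) => linear(s, 0, 80, 50, 200));

scales.forEach((s, frame) => console.log(`frame ${frame}: scale ${s.toFixed(1)}%`));
```

Mapping speed instead of raw position is just one choice; distance from the centre of the frame or the angle of movement would work the same way through `linear`.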

This was an example I gave to the dancers. I used it to explain how I’d turned audio amplitude into keyframes with numerical values. Here, those values were applied to the scale and opacity of a ring.

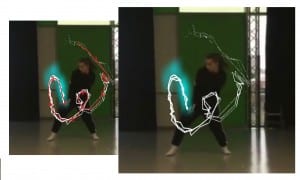
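The amplitude-to-ring mapping can be sketched as a simple remap from loudness to percentages. This is a minimal sketch, assuming amplitude values like those produced by After Effects’ Convert Audio to Keyframes; the `amplitude` array and the 0 to 15 loudness range are hypothetical and would need tuning to the actual track. Inside After Effects, assuming the default layer and effect names that command creates, an expression on the ring’s Scale property such as `a = thisComp.layer("Audio Amplitude").effect("Both Channels")("Slider"); s = linear(a, 0, 15, 80, 150); [s, s]` would do the same job.

```javascript
// Hypothetical amplitude values, one per frame, standing in for the keyframes
// that Convert Audio to Keyframes writes onto its "Audio Amplitude" layer.
const amplitude = [0, 2.5, 7, 12, 20, 9, 3];

// Clamped linear remap, mirroring linear(t, tMin, tMax, value1, value2)
// from the After Effects expression language.
function linear(t, tMin, tMax, value1, value2) {
  if (t <= tMin) return value1;
  if (t >= tMax) return value2;
  return value1 + ((t - tMin) / (tMax - tMin)) * (value2 - value1);
}

// Assumed loudness range: 0 is silence, 15 is treated as the peak.
const AMP_MIN = 0;
const AMP_MAX = 15;

// Quiet -> small, faint ring; loud -> large, opaque ring (both in percent).
const ringFrames = amplitude.map((a) => ({
  scale: linear(a, AMP_MIN, AMP_MAX, 80, 150),
  opacity: linear(a, AMP_MIN, AMP_MAX, 20, 100),
}));

ringFrames.forEach(({ scale, opacity }, frame) =>
  console.log(`frame ${frame}: scale ${scale.toFixed(1)}%, opacity ${opacity.toFixed(1)}%`)
);
```

Clamping matters here: a single loud peak above the assumed range simply holds the ring at full size and opacity instead of overshooting.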

As a more advanced example, I provided a rendering of particle layers. Each layer has its own unique values for velocity, longevity, opacity, and so on. The result was richer animation and a greater variety of motion that feels very organic and in tune with the music.
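One way to picture how several particle layers with their own values produce that variety is to feed the same amplitude into each layer through different ranges. This is a hypothetical sketch, assuming (as in the ring example) that audio amplitude is what drives the layers; the layer names and every range below are invented for illustration and are not the settings used in the rendering.

```javascript
// Hypothetical particle layers: each maps the same amplitude value onto its own
// ranges, written as [quiet, loud] pairs.
const layers = [
  { name: "dust", velocity: [20, 60], longevity: [3.0, 1.5], opacity: [10, 40] },
  { name: "sparks", velocity: [100, 400], longevity: [0.8, 0.3], opacity: [40, 100] },
  { name: "mist", velocity: [5, 15], longevity: [5.0, 4.0], opacity: [5, 20] },
];

// Clamped linear remap, mirroring linear(t, tMin, tMax, value1, value2)
// from the After Effects expression language.
function linear(t, tMin, tMax, value1, value2) {
  if (t <= tMin) return value1;
  if (t >= tMax) return value2;
  return value1 + ((t - tMin) / (tMax - tMin)) * (value2 - value1);
}

// Evaluate every layer's settings for a single amplitude sample.
// Longevity (seconds) shrinks as the music gets louder because its pairs run high to low.
function layerParams(amp, ampMax) {
  return layers.map(({ name, velocity, longevity, opacity }) => ({
    name,
    velocity: linear(amp, 0, ampMax, velocity[0], velocity[1]),
    longevity: linear(amp, 0, ampMax, longevity[0], longevity[1]),
    opacity: linear(amp, 0, ampMax, opacity[0], opacity[1]),
  }));
}

console.table(layerParams(10, 15));
```

Because every layer reads the same input through different ranges, a single beat pushes the sparks far harder than the mist, so the layers move together yet never identically.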